Immersive and Situated Analytics

Visualization researchers need to develop and adapt to today’s new devices and tomorrow’s interface technology. Today, people interact with visual depictions mainly through a mouse and, gradually, by touch. Tomorrow, they will be touching, grasping, feeling, hearing, smelling, and even tasting data.

This theme explores the concept of immersive, situated, multisensory, post-WIMP visualization and investigates how information visualization can employ different display and presentation technologies, such as head-mounted displays, projection systems, wearables, tabletop displays and haptic interfaces. Immersive and Situated Analytics (IA/SA) activities are a core theme of the XReality, Visualization and Analytics (XRVA) lab.

Publications

M. Borowski, P. W. S. Butcher, J. B. Kristensen, J. O. Petersen, P. D. Ritsos, C. N. Klokmose, and N. Elmqvist, “DashSpace: A Live Collaborative Platform for Immersive and Ubiquitous Analytics,” IEEE Transactions on Visualization and Computer Graphics (To appear), 2025.

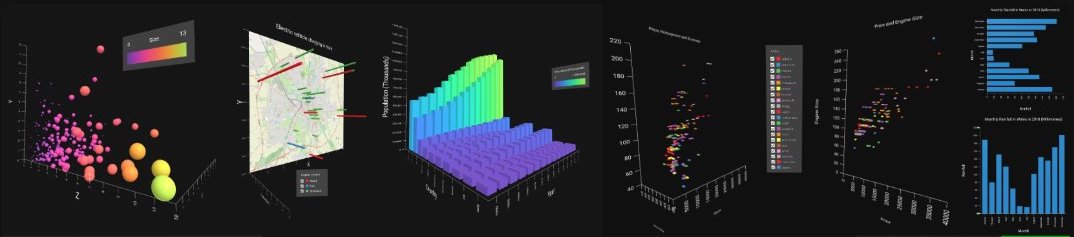

We introduce DashSpace, a live collaborative immersive and ubiquitous analytics (IA/UA) platform designed for handheld and head-mounted Augmented/Extended Reality (AR/XR) implemented using WebXR and open standards. To bridge the gap between existing web-based visualizations and the immersive analytics setting, DashSpace supports visualizing both legacy D3 and Vega-Lite visualizations on 2D planes, and extruding Vega-Lite specifications into 2.5D. It also supports fully 3D visual representations using the Optomancy grammar. To facilitate authoring new visualizations in immersive XR, the platform provides a visual authoring mechanism where the user groups specification snippets to construct visualizations dynamically. The approach is fully persistent and collaborative, allowing multiple participants—whose presence is shown using 3D avatars and webcam feeds—to interact with the shared space synchronously, both co-located and remotely. We present three examples of DashSpace in action: immersive data analysis in 3D space, synchronous collaboration, and immersive data presentations.

[doi:10.1109/TVCG.2025.3537679]

[To be presented at IEEE VIS 2025]

A. Srinivasan, J. Ellemose, P. W. S. Butcher, P. D. Ritsos, and N. Elmqvist, “Attention-Aware Visualization: Tracking and Responding to User Perception Over Time,” IEEE Transactions on Visualization and Computer Graphics, 2025.

We propose the notion of Attention-Aware Visualizations (AAVs) that track the user’s perception of a visual representation over time and feed this information back to the visualization. Such context awareness is particularly useful for ubiquitous and immersive analytics where knowing which embedded visualizations the user is looking at can be used to make visualizations react appropriately to the user’s attention: for example, by highlighting data the user has not yet seen. We can separate the approach into three components: (1) measuring the user’s gaze on a visualization and its parts; (2) tracking the user’s attention over time; and (3) reactively modifying the visual representation based on the current attention metric. In this paper, we present two separate implementations of AAV: a 2D data-agnostic method for web-based visualizations that can use an embodied eyetracker to capture the user’s gaze, and a 3D data-aware one that uses the stencil buffer to track the visibility of each individual mark in a visualization. Both methods provide similar mechanisms for accumulating attention over time and changing the appearance of marks in response. We also present results from a qualitative evaluation studying visual feedback and triggering mechanisms for capturing and revisualizing attention.

[doi:10.1109/TVCG.2024.3456300]

[Presented at IEEE VIS 2024]
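
The three components listed in the abstract above (measuring gaze, tracking attention over time, and reactively modifying the visual representation) can be illustrated with a minimal sketch. This is not the authors' implementation: the class name `AttentionTracker`, the `gain`, `decay` and `seen_threshold` parameters, and the opacity mapping are all assumptions made for illustration, and gaze input is reduced to a list of mark identifiers the user is currently looking at.

```python
from dataclasses import dataclass, field


@dataclass
class AttentionTracker:
    """Accumulates per-mark attention from gaze samples (illustrative only)."""
    gain: float = 1.0            # attention gained per second of gaze (assumed value)
    decay: float = 0.1           # attention lost per second without gaze (assumed value)
    seen_threshold: float = 0.5  # level at which a mark counts as "seen" (assumed value)
    attention: dict = field(default_factory=dict)

    def update(self, mark_ids, gazed_ids, dt):
        """Components 1 and 2: fold one gaze sample of duration dt into the totals."""
        gazed = set(gazed_ids)
        for mark in mark_ids:
            level = self.attention.get(mark, 0.0)
            if mark in gazed:
                level = min(1.0, level + self.gain * dt)
            else:
                level = max(0.0, level - self.decay * dt)
            self.attention[mark] = level

    def unseen(self, mark_ids):
        """Marks whose accumulated attention is still below the threshold."""
        return [m for m in mark_ids
                if self.attention.get(m, 0.0) < self.seen_threshold]


def opacity_for(tracker, mark):
    """Component 3: fade marks already attended to, keep unseen ones vivid."""
    level = tracker.attention.get(mark, 0.0)
    return 1.0 - 0.7 * level
```

In this sketch a renderer would call `update` once per frame and then use `unseen` or `opacity_for` to decide which marks to highlight. The linear gain and decay are one simple choice among many possible attention metrics.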

S. Shin, A. Batch, P. W. S. Butcher, P. D. Ritsos, and N. Elmqvist, “The Reality of the Situation: A Survey of Situated Analytics,” IEEE Transactions on Visualization and Computer Graphics, vol. 30, no. 8, pp. 5147–5164, Aug. 2024.

The advent of low-cost, accessible, and high-performance augmented reality (AR) has shed light on a situated form of analytics where in-situ visualizations embedded in the real world can facilitate sensemaking based on the user’s physical location. In this work, we identify prior literature in this emerging field with a focus on situated analytics. After collecting 47 relevant situated analytics systems, we classify them using a taxonomy of three dimensions: situating triggers, view situatedness, and data depiction. We then identify four archetypical patterns in our classification using an ensemble cluster analysis. We also assess the level to which these systems support the sensemaking process. Finally, we discuss insights and design guidelines that we learned from our analysis.

[doi:10.1109/TVCG.2023.3285546]

[Presented at IEEE VIS 2023]

P. W. S. Butcher, A. Batch, D. Saffo, B. MacIntyre, N. Elmqvist, and P. D. Ritsos, “Is Native Naïve? Comparing Native Game Engines and WebXR as Immersive Analytics Development Platforms,” IEEE Computer Graphics and Applications, vol. 44, no. 3, pp. 91–98, May 2024.

Native game engines have long been the 3D development platform of choice for research in mixed and augmented reality. For this reason they have also been adopted in many immersive visualization and immersive analytics systems and toolkits. However, with the rapid improvements of WebXR and related open technologies, this choice may not always be optimal for future visualization research. In this paper, we investigate common assumptions about native game engines vs. WebXR and find that while native engines still have an advantage in many areas, WebXR is rapidly catching up and is superior for many immersive analytics applications.

[doi:10.1109/MCG.2024.3367422]

A. Batch, P. W. S. Butcher, P. D. Ritsos, and N. Elmqvist, “Wizualization: A ‘Hard Magic’ Visualization System for Immersive and Ubiquitous Analytics,” IEEE Transactions on Visualization and Computer Graphics, vol. 30, no. 1, pp. 507–517, Jan. 2024.

What if magic could be used as an effective metaphor to perform data visualization and analysis using speech and gestures while mobile and on-the-go? In this paper, we introduce Wizualization, a visual analytics system for eXtended Reality (XR) that enables an analyst to author and interact with visualizations using such a magic system through gestures, speech commands, and touch interaction. Wizualization is a rendering system for current XR headsets that comprises several components: a cross-device (or Arcane Focuses) infrastructure for signalling and view control (Weave), a code notebook (SpellBook), and a grammar of graphics for XR (Optomancy). The system offers users three modes of input: gestures, spoken commands, and materials. We demonstrate Wizualization and its components using a motivating scenario on collaborative data analysis of pandemic data across time and space.

[doi:10.1109/TVCG.2023.3326580]

[Presented at IEEE VIS 2023]

P. W. S. Butcher, A. Batch, P. D. Ritsos, and N. Elmqvist, “Don’t Pull the Balrog — Lessons Learned from Designing Wizualization: a Magic-inspired Data Analytics System in XR,” in HybridUI: 1st Workshop on Hybrid User Interfaces: Complementary Interfaces for Mixed Reality Interaction, 2023.

This paper presents lessons learned in the design and development of Wizualization, a ubiquitous analytics system for authoring visualizations in WebXR using a magic metaphor. The system is based on a fundamentally hybrid and multimodal approach utilizing AR/XR, gestures, sound, and speech to support the mobile setting. Our lessons include how to overcome mostly technical challenges, such as view management and combining multiple sessions in the same analytical 3D space, but also user-based, design-oriented, and even social ones. Our intention in sharing these teachings is to help fellow travellers navigate the same troubled waters we have traversed.

A. Batch, S. Shin, J. Liu, P. W. S. Butcher, P. D. Ritsos, and N. Elmqvist, “Evaluating View Management for Situated Visualization in Web-based Handheld AR,” Computer Graphics Forum, vol. 42, no. 3, pp. 349–360, Jun. 2023.

As visualization makes the leap to mobile and situated settings, where data is increasingly integrated with the physical world using mixed reality, there is a corresponding need for effectively managing the immersed user’s view of situated visualizations. In this paper we present an analysis of view management techniques for situated 3D visualizations in handheld augmented reality: a shadowbox, a world-in-miniature metaphor, and an interactive tour. We validate these view management solutions through a concrete implementation of all techniques within a situated visualization framework built using a web-based augmented reality visualization toolkit, and present results from a user study in augmented reality accessed using handheld mobile devices.

[doi:10.1111/cgf.14835]

[Presented at EG EuroVis 2023]

P. W. S. Butcher, N. W. John, and P. D. Ritsos, “VRIA: A Web-based Framework for Creating Immersive Analytics Experiences,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 7, pp. 3213–3225, Jul. 2021.

We present <VRIA>, a Web-based framework for creating Immersive Analytics (IA) experiences in Virtual Reality. <VRIA> is built upon WebVR, A-Frame, React and D3.js, and offers a visualization creation workflow which enables users, of different levels of expertise, to rapidly develop Immersive Analytics experiences for the Web. The use of these open-standards Web-based technologies allows us to implement VR experiences in a browser and offers strong synergies with popular visualization libraries, through the HTML Document Object Model (DOM). This makes <VRIA> ubiquitous and platform-independent. Moreover, by using WebVR’s progressive enhancement, the experiences <VRIA> creates are accessible on a plethora of devices. We elaborate on our motivation for focusing on open-standards Web technologies, present the <VRIA> creation workflow and detail the underlying mechanics of our framework. We also report on techniques and optimizations necessary for implementing Immersive Analytics experiences on the Web, discuss scalability implications of our framework, and present a series of use case applications to demonstrate the various features of <VRIA>. Finally, we discuss current limitations of our framework, the lessons learned from its development, and outline further extensions.

[doi:10.1109/TVCG.2020.2965109]

[Presented at IEEE VIS 2020]

P. W. S. Butcher, N. W. John, and P. D. Ritsos, “VRIA - A Framework for Immersive Analytics on the Web,” in Extended Abstracts of the CHI Conference on Human Factors in Computing Systems (ACM CHI 2019), Glasgow, UK, 2019.

We report on the design, implementation and evaluation of VRIA, a framework for building immersive analytics (IA) solutions in Web-based Virtual Reality (VR), built upon WebVR, A-Frame, React and D3. The recent emergence of affordable VR interfaces has reignited the interest of researchers and developers in exploring new, immersive ways to visualize data. In particular, the use of open-standards web-based technologies for implementing VR in a browser facilitates the ubiquitous and platform-independent adoption of IA systems. Moreover, such technologies work in synergy with established visualization libraries, through the HTML document object model (DOM). We discuss high-level features of VRIA and present a preliminary user experience evaluation of one of our use cases.

[doi:10.1145/3290607.3312798]

R. L. Williams, D. Farmer, J. C. Roberts, and P. D. Ritsos, “Immersive visualisation of COVID-19 UK travel and US happiness data,” in Posters presented at the IEEE Conference on Visualization (IEEE VIS 2020), Virtual Event, 2020.

The global COVID-19 pandemic has had a great effect on the lives of everyone, from changing how children are educated to how, or whether at all, we travel, go to work or do our shopping. Consequently, not only has people’s happiness changed throughout the pandemic, but there have been fewer vehicles on the roads. We present work to visualise both US happiness and UK travel data, as examples, in immersive environments. These impromptu visualisations encourage discussion and engagement with these topics, and can help people see the data in an alternative way.

P. W. S. Butcher, N. W. John, and P. D. Ritsos, “Towards a Framework for Immersive Analytics on the Web,” in Posters presented at the IEEE Conference on Visualization (IEEE VIS 2018), Berlin, Germany, 2018.

We present work-in-progress on the design and implementation of a Web framework for building Immersive Analytics (IA) solutions in Virtual Reality (VR). We outline the design of our prototype framework, VRIA, which facilitates the development of VR spaces for IA solutions, which can be accessed via a Web browser. VRIA is built on emerging open-standards Web technologies such as WebVR, A-Frame and React, and supports a variety of interaction devices (e.g., smartphones, head-mounted displays etc.). We elaborate on our motivation for focusing on open-standards Web technologies and provide an overview of our framework. We also present two early visualization components. Finally, we outline further extensions and investigations.

P. D. Ritsos, J. Mearman, J. R. Jackson, and J. C. Roberts, “Synthetic Visualizations in Web-based Mixed Reality,” in Immersive Analytics: Exploring Future Visualization and Interaction Technologies for Data Analytics Workshop, IEEE Conference on Visualization (VIS), Phoenix, Arizona, USA, 2017.

The way we interact with computers is constantly evolving, with technologies like Mixed/Augmented Reality (MR/AR) and the Internet of Things (IoT) set to change our perception of informational and physical space. In parallel, interest in interacting with data in new ways is driving the investigation of the synergy of these domains with data visualization. We are seeking new ways to contextualize, visualize, interact with and interpret our data. In this paper we present the notion of Synthetic Visualizations, which enable us to visualize, in situ, data embedded in physical objects, using MR. We use a combination of established ‘markers’, such as Quick Response Codes (QR Codes) and Augmented Reality Markers (AR Markers), not only to register objects in physical space, but also to contain data to be visualized, and interchange the type of visualization to be used. We visualize said data in Mixed Reality (MR), using emerging web-technologies and open-standards.
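
The idea of a marker that carries both the data and the type of visualization can be illustrated with a small decoding sketch. The abstract does not describe the payload format, so everything here is hypothetical: the JSON layout with `vis` and `data` keys, the function names, and the text-only "renderers" that stand in for actual Mixed Reality rendering.

```python
import json


def describe_bar(values):
    """Stand-in for a bar chart renderer; returns a text description."""
    return f"bar chart with {len(values)} bars, max {max(values)}"


def describe_line(values):
    """Stand-in for a line chart renderer; returns a text description."""
    return f"line chart through {len(values)} points"


# Hypothetical mapping from the visualization type named in the marker
# to the routine that would draw it.
RENDERERS = {"bar": describe_bar, "line": describe_line}


def decode_marker(payload):
    """Split a decoded marker payload into (visualization type, data values)."""
    record = json.loads(payload)
    vis_type = record["vis"]
    if vis_type not in RENDERERS:
        raise ValueError(f"unsupported visualization type: {vis_type}")
    values = [float(v) for v in record["data"]]
    return vis_type, values


def visualize(payload):
    """Dispatch the embedded data to the renderer the marker asks for."""
    vis_type, values = decode_marker(payload)
    return RENDERERS[vis_type](values)
```

For example, `visualize('{"vis": "bar", "data": [3, 9, 4]}')` selects the bar renderer, and changing only the `vis` field of the same payload would switch the depiction without touching the data.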

P. D. Ritsos, J. Jackson, and J. C. Roberts, “Web-based Immersive Analytics in Handheld Augmented Reality,” in Posters presented at the IEEE Conference on Visualization (IEEE VIS 2017), Phoenix, Arizona, USA, 2017.

The recent popularity of virtual reality (VR), and the emergence of a number of affordable VR interfaces, have prompted researchers and developers to explore new, immersive ways to visualize data. This has resulted in a new research thrust, known as Immersive Analytics (IA). However, in IA little attention has been given to the paradigms of augmented/mixed reality (AR/MR), where computer-generated and physical objects co-exist. In this work, we explore the use of contemporary web-based technologies for the creation of immersive visualizations for handheld AR, combining D3.js with the open standards-based Argon AR framework and A-Frame/WebVR. We argue in favor of using emerging standards-based web technologies as they work well with contemporary visualization tools that are purposefully built for data binding and manipulation.

P. W. Butcher and P. D. Ritsos, “Building Immersive Data Visualizations for the Web,” in Proceedings of International Conference on Cyberworlds (CW’17), Chester, UK, 2017.

We present our early work on building prototype applications for Immersive Analytics using emerging standards-based web technologies for VR. For our preliminary investigations we visualize 3D bar charts that attempt to resemble recent physical visualizations built in the visualization community. We explore some of the challenges faced by developers in working with emerging VR tools for the web, and in building effective and informative immersive 3D visualizations.

[doi:10.1109/CW.2017.11]

P. W. S. Butcher, J. C. Roberts, and P. D. Ritsos, “Immersive Analytics with WebVR and Google Cardboard,” in Posters presented at the IEEE Conference on Visualization (IEEE VIS 2016), Baltimore, MD, USA, 2016.

We present our initial investigation of a low-cost, web-based virtual reality platform for immersive analytics, using a Google Cardboard, with a view to extending to other similar platforms such as Samsung’s Gear VR. Our prototype uses standards-based emerging frameworks, such as WebVR, and explores some of the challenges faced by developers in building effective and informative immersive 3D visualizations, particularly those that attempt to resemble recent physical visualizations built in the community.

J. C. Roberts, P. D. Ritsos, S. K. Badam, D. Brodbeck, J. Kennedy, and N. Elmqvist, “Visualization Beyond the Desktop - the next big thing,” IEEE Computer Graphics and Applications, vol. 34, no. 6, pp. 26–34, Nov. 2014.

Visualization is coming of age. With visual depictions being seamlessly integrated into documents, and data visualization techniques being used to understand increasingly large and complex datasets, the term "visualization" is becoming used in everyday conversations. But we are on a cusp; visualization researchers need to develop and adapt to today’s new devices and tomorrow’s technology. Today, people interact with visual depictions through a mouse. Tomorrow, they’ll be touching, swiping, grasping, feeling, hearing, smelling, and even tasting data. The next big thing is multisensory visualization that goes beyond the desktop.

[doi:10.1109/MCG.2014.82]

[Presented at IEEE VIS 2015]

P. D. Ritsos, J. W. Mearman, A. Vande Moere, and J. C. Roberts, “Sewn with Ariadne’s Thread - Visualizations for Wearable & Ubiquitous Computing,” in Death of the Desktop Workshop, IEEE Conference on Visualization (VIS), Paris, France, 2014.

Lance felt a buzz on his wrist, as Alicia, his wearable, informed him via the bone-conduction ear-piece - ‘You have received an email from Dr Jones about the workshop’. His wristwatch displayed an unread email glyph icon. Lance tapped it and listened to the voice of Dr Jones, talking about the latest experiment. At the same time he scanned through the email attachments, projected in front of his eyes, through his contact lenses. One of the files had a dataset of a carbon femtotube structure

J. C. Roberts, J. W. Mearman, and P. D. Ritsos, “The desktop is dead, long live the desktop! – Towards a multisensory desktop for visualization,” in Death of the Desktop Workshop, IEEE Conference on Visualization (VIS), Paris, France, 2014.

“Le roi est mort, vive le roi!”; or “The King is dead, long live the King” was a phrase originally used for the French throne of Charles VII in 1422, upon the death of his father Charles VI. To stave off civil unrest the governing figures wanted perpetuation of the monarchs. Likewise, while the desktop as-we-know-it is dead (the use of the WIMP interface is becoming obsolete in visualization) it is being superseded by a new type of desktop environment: a multisensory visualization space. This space is still a personal workspace; it’s just a new kind of desk environment. Our vision is that data visualization will become more multisensory, integrating and demanding all our senses (sight, touch, hearing, taste, smell, etc.), to both manipulate and perceive the underlying data and information.

Collaborators (in various publications)

Aarhus University, University of Maryland - College Park, Edinburgh Napier University, University of Applied Sciences and Arts Northwestern Switzerland, University of Chester