Paper accepted at UIST'25

Our paper “Spatialstrates: Cross-Reality Collaboration through Spatial Hypermedia” has been accepted to the 38th Annual ACM Symposium on User Interface Software and Technology (UIST 2025), to be held in Busan, South Korea, in September 2025.

This paper continues our work on immersive toolkits, first explored with DashSpace, and extends it toward cross-reality collaboration through spatial hypermedia.

Contemporary consumer-level XR hardware enables immersive spatial computing, yet most knowledge work remains confined to traditional 2D desktop environments. These worlds exist in isolation: writing emails or editing presentations favors desktop interfaces, while viewing 3D simulations or architectural models benefits from immersive environments.

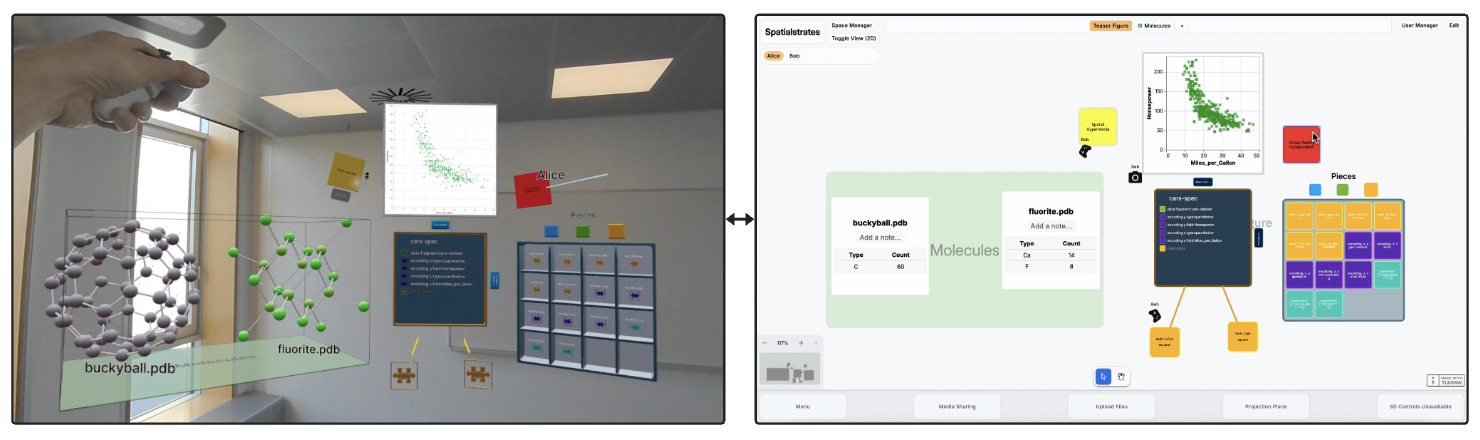

We address this fragmentation by combining spatial hypermedia, shareable dynamic media, and cross-reality computing to provide (1) composability of heterogeneous content and of nested information spaces through spatial transclusion, (2) pervasive cooperation across heterogeneous devices and platforms, and (3) congruent spatial representations despite underlying environmental differences.

Our implementation, the Spatialstrates platform, embodies these principles using standard web technologies to bridge 2D desktop and 3D immersive environments.

Reference

M. Borowski, J. E. Grønbæk, P. W. S. Butcher, P. D. Ritsos, C. N. Klokmose, and N. Elmqvist, “Spatialstrates: Cross-Reality Collaboration through Spatial Hypermedia,” in Proceedings of the 38th Annual ACM Symposium on User Interface Software and Technology (UIST 2025), New York, NY, USA, 2025.

Consumer-level XR hardware now enables immersive spatial computing, yet most knowledge work remains confined to traditional 2D desktop environments. These worlds exist in isolation: writing emails or editing presentations favors desktop interfaces, while viewing 3D simulations or architectural models benefits from immersive environments. We address this fragmentation by combining spatial hypermedia, shareable dynamic media, and cross-reality computing to provide (1) composability of heterogeneous content and of nested information spaces through spatial transclusion, (2) pervasive cooperation across heterogeneous devices and platforms, and (3) congruent spatial representations despite underlying environmental differences. Our implementation, the Spatialstrates platform, embodies these principles using standard web technologies to bridge 2D desktop and 3D immersive environments. Through four scenarios (collaborative brainstorming, architectural design, molecular science visualization, and immersive analytics), we demonstrate how Spatialstrates enables collaboration between 2D desktop and 3D immersive contexts, allowing users to select the most appropriate interface for each task while maintaining collaborative capabilities.

[doi:10.1145/3746059.3747708]